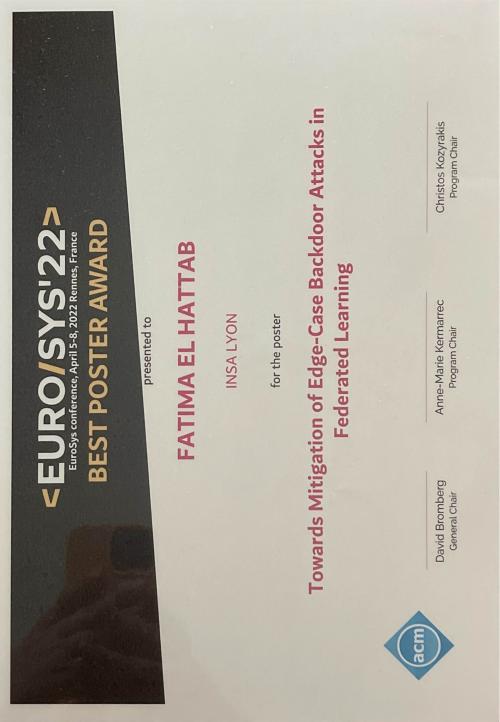

"Best Poster Award" for Fatima Elhattab

Federated Learning (FL) allows several data owners to train a joint model without sharing their training data. However, FL is vulnerable to poisoning attacks in which malicious workers attempt to inject a backdoor task into the model at training time, alongside the main task the model is being trained for. Moreover, recent work shows that FL is particularly vulnerable to edge-case backdoors, which are introduced by data points with unusual, out-of-distribution features. Such attacks are among the hardest to counter, and today’s FL defense mechanisms generally fail to stop them. In this paper, we propose a defense mechanism called ARMOR that leverages adversarial learning to uncover edge-case backdoors. More precisely, ARMOR relies on GANs to extract data features from model updates and uses the generated samples to activate potential backdoors in the model. Furthermore, unlike most existing FL defense mechanisms, ARMOR does not require real data samples and is compatible with secure aggregation, thus providing stronger privacy protection in FL. Our experimental evaluations with various datasets and neural network models show that ARMOR counters edge-case backdoors with 95% resilience against attacks without hurting model quality.
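To make the setting concrete, here is a minimal sketch of federated averaging combined with pairwise-masked secure aggregation, the kind of aggregation the abstract says ARMOR remains compatible with. It is not ARMOR itself and does not show the GAN-based detection. It only illustrates why the server, under secure aggregation, sees the aggregate of the updates but not any single worker's update. Assumptions (all hypothetical): equal client weights, a fixed-point encoding with `SCALE = 2**16` modulo `Q = 2**32`, pairwise seeds handed out by a trusted setup instead of a real key exchange, and no client dropouts.

```python
import random

Q = 2**32       # modulus for masked arithmetic (assumption)
SCALE = 2**16   # fixed-point scale used to encode floats (assumption)


def encode(x: float) -> int:
    """Encode a float as a fixed-point integer modulo Q."""
    return round(x * SCALE) % Q


def decode(v: int) -> float:
    """Map an integer in [0, Q) back to a signed fixed-point float."""
    if v >= Q // 2:
        v -= Q
    return v / SCALE


def masked_update(client_id, update, client_ids, seeds):
    """Client side: encode the update and add pairwise masks.

    For each pair (a, b) with a < b, client a adds the mask and client b
    subtracts the same mask, so all masks cancel in the server's sum.
    """
    out = [encode(x) for x in update]
    for other in client_ids:
        if other == client_id:
            continue
        pair = (min(client_id, other), max(client_id, other))
        rng = random.Random(seeds[pair])  # both peers derive the same mask
        mask = [rng.randrange(Q) for _ in update]
        sign = 1 if client_id < other else -1
        out = [(o + sign * m) % Q for o, m in zip(out, mask)]
    return out


def secure_fedavg(updates):
    """Server side: average the updates while only ever seeing masked vectors."""
    client_ids = sorted(updates)
    # Hypothetical trusted setup; real protocols agree on seeds via key exchange.
    sysrand = random.SystemRandom()
    seeds = {(a, b): sysrand.getrandbits(64)
             for a in client_ids for b in client_ids if a < b}
    masked = [masked_update(c, updates[c], client_ids, seeds) for c in client_ids]
    dim = len(updates[client_ids[0]])
    # Summing the masked vectors cancels the masks; only the aggregate is revealed.
    total = [sum(vec[i] for vec in masked) % Q for i in range(dim)]
    return [decode(t) / len(client_ids) for t in total]


if __name__ == "__main__":
    updates = {0: [0.5, -1.0], 1: [1.5, 0.25], 2: [-0.5, 0.75]}
    print(secure_fedavg(updates))  # prints [0.5, 0.0]
```

Each individual masked vector looks uniformly random to the server, yet the sum decodes to the plain average. This is why defenses that inspect or filter each worker's update separately clash with secure aggregation, while a defense that works without per-worker access does not.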

Link: https://2022.eurosys.org/