Making Computer Graphics Research Replicable

A recurring problem in scientific research is the lack of replicability of published papers. Research that peers cannot reproduce despite their best efforts often has limited value, and thus limited impact: it does not benefit others, cannot serve as a basis for further research, and casts doubt on the published results. In computer science, replicability is substantially improved when the source code that generated the publication data (images, graphs, computation times, etc.) is available. However, sharing source code is not widespread.

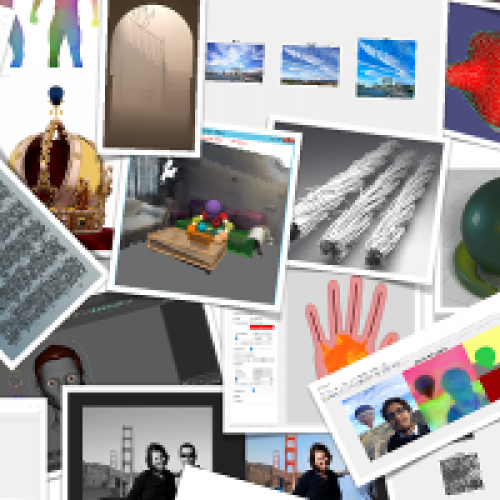

By analyzing 152 source codes from 374 publications accepted to the 2014, 2016 and 2018 editions of SIGGRAPH, the leading conference in the field, the researchers assessed the state of replicability in computer graphics research. They tried to compile and execute these codes and to reproduce the published results, while keeping note of the procedure needed to make each code work (including debugging, code updates, etc.). The outcome of this vast analysis is a website that gathers these observations and is open to the research community for new contributions: https://replicability.graphics.

A general trend towards improvement

A first observation is that researchers share their code more over time: the code sharing rate rose from less than 30% in 2014 to more than 50% in 2018. Strong disparities are observed between computer graphics topics, ranging from around 17% for fabrication publications (3D printing, optical system design, etc.), whose contributions are often technological, to more than 60% for image publications (image and video processing, computational photography, etc.). Researchers from academia also tend to share their source code more than researchers from industry. Furthermore, publications with available code are often cited more, with an increase of 56% in citations for 2014 publications.

However, sharing the code is not enough to make a publication replicable. More than half of the codes had to be modified before they would compile. Recurring problems include missing documentation, missing data, and dependencies whose versions are not explicitly stated. Sometimes, results are produced in an obscure format (to the point that the authors of the study had to develop tools to read them). Finally, the rapid evolution of computer hardware (in particular graphics cards) and of libraries (especially in deep learning) makes old codes difficult to compile.
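One of the problems listed above, unstated dependency versions, can be reduced by shipping a small version record with the released code. The following is only a minimal sketch and is not taken from the study: the function names `snapshot_environment` and `write_snapshot`, the output file name, and the package list are all hypothetical, and the approach assumes a Python code base (Python 3.8 or later).

```python
import json
import platform
from importlib import metadata


def snapshot_environment(packages):
    """Return a dict describing the interpreter, OS, and package versions.

    `packages` is a list of distribution names chosen by the author
    (a hypothetical list, not something prescribed by the study).
    """
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Record missing packages explicitly instead of failing.
            versions[name] = None
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": versions,
    }


def write_snapshot(path, packages):
    """Write the snapshot as JSON so it can be shipped with the code."""
    snapshot = snapshot_environment(packages)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(snapshot, f, indent=2, sort_keys=True)
    return snapshot
```

For example, calling `write_snapshot("environment.json", ["numpy", "scipy"])` when the paper's figures are generated would leave a record of the exact versions in use. This does not replace documentation or data, but it gives someone compiling the code years later a concrete starting point.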

The researchers hope that this analysis will not only encourage code sharing within the computer graphics community, but also improve code sharing practices, so that released codes become more usable.