Visual Attention for Rendered 3D Shapes

In Computer Graphics Forum (Proceedings of Eurographics 2018)

Abstract

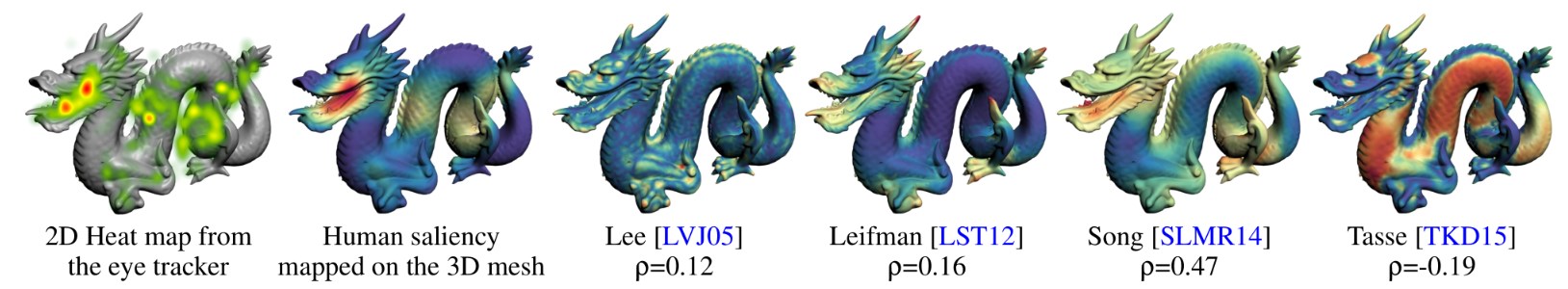

Understanding the attentional behavior of the human visual system when viewing a rendered 3D shape is of great importance for many computer graphics applications. Eye tracking remains the only solution to explore this complex cognitive mechanism. Unfortunately, despite the large number of studies dedicated to images and videos, only a few eye-tracking experiments have been conducted using 3D shapes. Thus, the factors that may influence human gaze in the specific setting of 3D are still to be understood. In this work, we conduct two eye-tracking experiments involving 3D shapes, with both static and time-varying camera positions. We propose a method for mapping eye fixations (i.e., where humans gaze) onto the 3D shapes, with the aim of producing a publicly available benchmark of 3D meshes with fixation density maps. First, the collected data is used to study the influence of shape, camera position, material and illumination on visual attention. We find that material and lighting have a significant influence on attention, as does the camera path in the case of dynamic scenes. Then, we compare the performance of four representative state-of-the-art mesh saliency models in predicting ground-truth fixations using two different metrics. We show that, even when combined with a center-bias model, 3D saliency algorithms remain poor at predicting human fixations. To explain their weaknesses, we provide a qualitative analysis of the main factors that attract human attention. Finally, we provide a quantitative comparison of human eye fixations and Schelling points and show that their correlation is weak.

Supplementary Material (37MB)

Dataset

All data can be downloaded via the following links:

Simplified models (13MB) - 32 shapes with decreased resolution (OBJ format), used to compute the statistics.

Fixation maps (2MB) - ASCII files with per-vertex fixation values. 96 fixation files, one per view and per 3D shape.

Per-subject fixation maps (236MB) - ASCII files with per-vertex, per-subject fixation values (1824 files) + corresponding JPEG maps (1824 files).

Saliency maps (36MB) - ASCII files with per-vertex saliency values. 512 saliency files, one per shape, algorithm (Lee, Leifman, Song and Tasse) and blurring factor (3 blurring levels + no blurring).

Centricity and visibility maps (32MB) - ASCII files with per-vertex visibility values (96 files) and ASCII files with per-vertex centricity values (for 13 different Gaussian radii) (1248 files).
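
The per-vertex files above can be paired with the vertices of the simplified OBJ models. The following Python sketch shows one possible way to do this. It assumes that each ASCII file stores one numeric value per line, in the same order as the `v` lines of the corresponding OBJ file; this layout is an assumption and should be checked against the downloaded files. The function names are hypothetical and are not part of the dataset.

```python
from typing import Iterable, List, Tuple

Vertex = Tuple[float, float, float]


def read_obj_vertices(lines: Iterable[str]) -> List[Vertex]:
    """Collect the 'v x y z' vertex positions of a Wavefront OBJ file."""
    vertices = []
    for line in lines:
        parts = line.split()
        # Only geometric vertices are kept; faces, normals, etc. are skipped.
        if len(parts) >= 4 and parts[0] == "v":
            vertices.append((float(parts[1]), float(parts[2]), float(parts[3])))
    return vertices


def read_per_vertex_values(lines: Iterable[str]) -> List[float]:
    """Read per-vertex values.

    ASSUMPTION: one numeric value per non-empty line, in vertex order.
    """
    values = []
    for line in lines:
        stripped = line.strip()
        if stripped:
            values.append(float(stripped))
    return values


def pair_values_with_vertices(
    vertices: List[Vertex], values: List[float]
) -> List[Tuple[Vertex, float]]:
    """Attach one value to each vertex, failing loudly on a count mismatch."""
    if len(vertices) != len(values):
        raise ValueError(
            f"{len(vertices)} vertices but {len(values)} per-vertex values"
        )
    return list(zip(vertices, values))


if __name__ == "__main__":
    # Tiny in-memory stand-ins for an OBJ model and a fixation map.
    obj_text = ["v 0 0 0", "v 1 0 0", "v 0 1 0", "f 1 2 3"]
    map_text = ["0.10", "0.75", "0.15"]
    pairs = pair_values_with_vertices(
        read_obj_vertices(obj_text), read_per_vertex_values(map_text)
    )
    for vertex, value in pairs:
        print(vertex, value)
```

With real data, the two readers can be given open file handles (for example `open(path)`) in place of the in-memory lists. The explicit count check is there because a value file only makes sense for the mesh it was computed on.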